A brain–computer interface device synthesizes speech using the neural signals that control lip, tongue, larynx and jaw movements, and could be a stepping stone to restoring speech function in individuals unable to speak.

Speaking might seem an effortless activity, but it is one of the most complex actions that we perform. It requires precise, dynamic coordination of muscles in the articulator structures of the vocal tract — the lips, tongue, larynx and jaw. When speech is disrupted as a consequence of stroke, amyotrophic lateral sclerosis or other neurological disorders, loss of the ability to communicate can be devastating. In a paper in Nature, Anumanchipalli et al.1 bring us closer to a brain–computer interface (BCI) that can restore speech function.

Brain–computer interfaces aim to help people with paralysis by ‘reading’ their intentions directly from the brain and using that information to control external devices or move paralysed limbs. The development of BCIs for communication has been mainly focused on brain-controlled typing2, allowing people with paralysis to type up to eight words per minute3. Although restoring this level of function might change the lives of people who have severe communication deficits, typing-based BCIs are unlikely to achieve the fluid communication of natural speech, which averages about 150 words per minute. Anumanchipalli et al. have developed an approach in which spoken sentences are produced from brain signals using deep-learning methods.

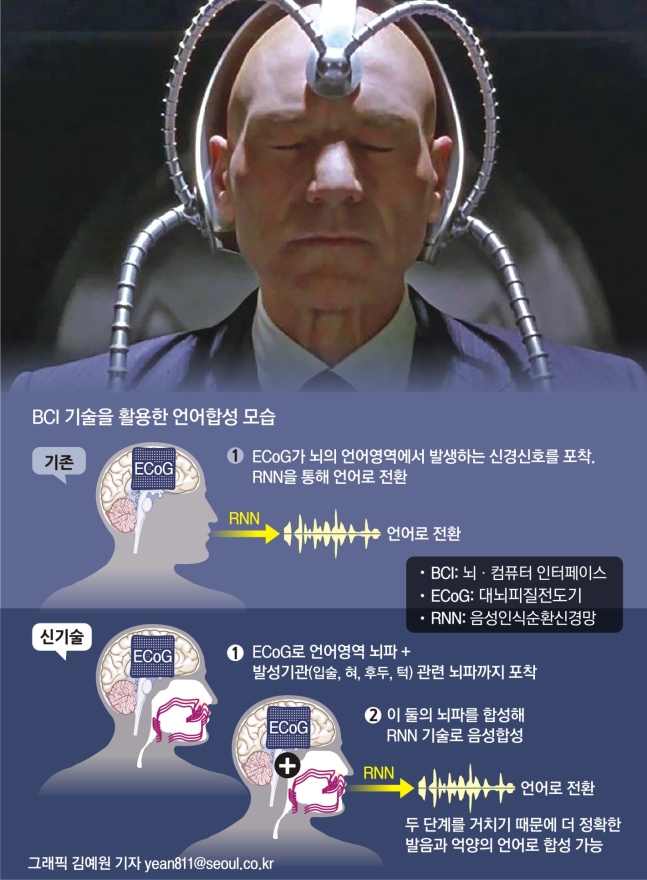

The researchers worked with five volunteers who were undergoing a procedure termed intracranial monitoring, in which electrodes are used to monitor brain activity as part of a treatment for epilepsy. The authors used a technique called high-density electrocorticography to track the activity of areas of the brain that control speech and articulator movement as the volunteers spoke several hundred sentences. To reconstruct speech, rather than transforming brain signals directly into audio signals, Anumanchipalli et al. used a two-stage decoding approach in which they first transformed neural signals into representations of movements of the vocal-tract articulators, and then transformed the decoded movements into spoken sentences (Fig. 1). Both of these transformations used recurrent neural networks — a type of artificial neural network that is particularly effective at processing and transforming data that have a complex temporal structure.

Figure 1 | Brain–computer interfaces for speech synthesis. a, Previous research in speech synthesis has taken the approach of monitoring neural signals in speech-related areas of the brain using an electrocorticography (ECoG) device and attempting to decode these signals directly into synthetic speech using a type of artificial neural network called a recurrent neural network (RNN). b, Anumanchipalli et al.1 developed a different method in which RNNs are used for two steps of decoding. One of these decoding steps transforms neural signals into estimated movements of the vocal-tract articulators (red) — the anatomical structures involved in speech production (lips, tongue, larynx and jaw). For training purposes in the first decoding step, the authors needed data that related each person’s vocal-tract movements to their neural activity. Because Anumanchipalli et al. could not measure each person’s vocal-tract movements directly, they built an RNN to estimate these movements on the basis of a large library of previously collected data4 of vocal-tract movements and speech recordings from many people. This RNN produced vocal-tract movement estimates that were sufficient to train the first decoder. The second decoding step transforms these estimated movements into synthetic speech. Anumanchipalli and colleagues’ two-step decoding approach produced spoken sentences that had markedly less distortion than is obtained with a comparable direct decoding approach.

Learning how brain signals relate to the movements of the vocal-tract articulators was challenging, because it is difficult to measure these movements directly when working in a hospital setting with people who have epilepsy. Instead, the authors used information from a model that they had developed previously4, which uses an artificial neural network to transform recorded speech into the movements of the vocal-tract articulators that produced it. This model is not subject-specific; rather, it was built using a large library of data collected from previous research participants4. By including a model to estimate vocal-tract movements from recorded speech, the authors could map brain activity onto vocal-tract movements without directly measuring the movements themselves.

Several studies have used deep-learning methods to reconstruct audio signals from brain signals (see, for example, refs 5, 6). These include an exciting BCI approach in which neural networks were used to synthesize spoken words (mostly monosyllabic) directly from brain areas that control speech6. By contrast, Anumanchipalli and colleagues split their decoding approach into two stages (one that decodes movements of the vocal-tract articulators and another that synthesizes speech), building on their previous observation that activity in speech-related brain areas corresponds more closely to the movements of the vocal articulators than to the acoustic signals produced during speech4.

The authors’ two-stage approach resulted in markedly less acoustic distortion than occurred with the direct decoding of acoustic features. If massive data sets spanning a wide variety of speech conditions were available, direct synthesis would probably match or outperform a two-stage decoding approach. However, given the data-set limitations that exist in practice, having an intermediate stage of decoding brings information about normal motor function of the vocal-tract articulators into the model, and constrains the possible parameters of the neural-network model that must be evaluated. This approach seems to have enabled the neural networks to achieve higher performance. Ultimately, ‘biomimetic’ approaches that mirror normal motor function might have a key role in replicating the high-speed, high-accuracy communication typical of natural speech.

The development and adoption of robust metrics that allow meaningful comparisons across studies is a challenge in BCI research, including the nascent field of speech BCIs. For example, a metric such as the error in reconstructing the original spoken audio might have little correspondence to a BCI’s functional performance; that is, whether a listener can understand the synthesized speech. To address this problem, Anumanchipalli et al. developed easily replicable measures of speech intelligibility for human listeners, taken from the field of speech engineering. The researchers recruited users on the crowdsourcing marketplace Amazon Mechanical Turk, and tasked them with identifying words or sentences from synthesized speech. Unlike the reconstruction error or previously used automated intelligibility measures6, this approach directly measures the intelligibility of speech to human listeners without the need for comparison with the original spoken words.

Anumanchipalli and colleagues’ results provide a compelling proof of concept for a speech-synthesis BCI, both in terms of the accuracy of audio reconstruction and in the ability of listeners to classify the words and sentences produced. However, many challenges remain on the path to a clinically viable speech BCI. The intelligibility of the reconstructed speech was still much lower than that of natural speech. Whether the BCI can be further improved by collecting larger data sets and continuing to develop the underlying computational approaches remains to be seen. Additional improvements might be obtained by using neural interfaces that record more-localized brain activity than that recorded with electrocorticography. Intracortical microelectrode arrays, for example, have generally led to higher performance than electrocorticography in other areas of BCI research3,7.

Another limitation of all current approaches for speech decoding is the need to train decoders using vocalized speech. Therefore, BCIs based on these approaches could not be directly applied to people who cannot speak. But Anumanchipalli and colleagues showed that speech synthesis was still possible when volunteers mimed speech without making sounds, although speech decoding was substantially less accurate. Whether individuals who can no longer produce speech-related movements will be able to use speech-synthesis BCIs is a question for future research. Notably, after the development of the first proof-of-concept studies of BCIs to control arm and hand movements in healthy animals, similar questions were raised about the applicability of such BCIs in people with paralysis. Subsequent clinical trials have compellingly demonstrated rapid communication, control of robotic arms and restoration of sensation and movement of paralysed limbs in humans using these BCIs8,9.

Given that human speech production cannot be directly studied in animals, the rapid progress in this research area over the past decade — from groundbreaking clinical studies that probed the organization of speech-related brain regions10 to proof-of-concept speech-synthesis BCIs6 — is truly remarkable. These achievements are a testament to the power of multidisciplinary collaborative teams that combine neurosurgeons, neurologists, engineers, neuroscientists, clinical staff, linguists and computer scientists. The most recent results would also have been impossible without the emergence of deep-learning and artificial neural networks, which have widespread applications in neuroscience and neuroengineering11–13.

Finally, these compelling proof-of-concept demonstrations of speech synthesis in individuals who cannot speak, combined with the rapid progress of BCIs in people with upper-limb paralysis, argue that clinical studies involving people with speech impairments should be strongly considered. With continued progress, we can hope that individuals with speech impairments will regain the ability to freely speak their minds and reconnect with the world around them.

Nature 568, 466-467 (2019)

(원문: 여기를 클릭하세요~)

이 장치 일찍 나왔다면… 호킹 박사의 영국식 억양도 들었을 텐데

생각대로 발음하는 음성 합성 기술 개발[서울신문]

뇌에 전극 이식해 단어·문자로 재구성

억양 변화 못 시키는 기존 장치와 달리

발성기관 움직임 관련 뇌 신호까지 추출

언어의 리듬·성별·정체성까지 조절 가능

텔레파시는 언어가 아닌 뇌파와 같은 비언어적 방식으로 타인과 소통하는 행위이다. SF에서나 등장하는 것으로 알려져 있지만 최근 BMI(뇌·기계 인터페이스)와 BCI(뇌·컴퓨터 인터페이스) 기술의 발달로 현실화되고 있다. 영화 ‘엑스맨’에 등장하는 프로페서X가 특수 장치(세레브로)를 이용해 텔레파시로 소통하는 모습. 마블스튜디오 제공

지난해 3월 타계한 세계적인 물리학자 스티븐 호킹은 1985년 급성 폐렴으로 사경을 헤매다가 기관지 절개수술을 받고 겨우 살아났다. 대신 웃음소리를 제외한 자신의 목소리를 잃고 컴퓨터 음성합성기를 통한 목소리를 갖게 됐다. 호킹 박사처럼 루게릭병이나 뇌졸중, 외상성 뇌손상, 파킨슨병, 다발성 경화증 같은 퇴행성 신경질환을 앓는 사람들은 말을 할 수 없게 되는 경우가 많아 언어전환 장치를 사용하곤 한다. 이 장치는 눈이나 미세한 몸짓으로 컴퓨터 커서를 작동시키거나 화면의 글자를 선택해 말을 하거나 글을 쓸 수 있게 해 준다. 일반인이 분당 100~150단어를 말하는 것에 비해 분당 10단어 정도밖에 표현할 수 없어서 대화에 빠르게 끼어들지도 못하고 언어의 톤이나 억양을 변화시킬 수도 없다.

그러나 최근 뇌과학의 발달로 뇌신경 손상으로 인해 말을 하거나 글을 쓸 수 없는 환자들이 머릿속에서 말하고자 하는 내용을 밖으로 끄집어낼 수 있는 방법들이 속속 연구되고 있다.

지난 1월 미국 컬럼비아대, 호프스트라 노스웰 의대 공동연구팀은 뇌 속에 전극을 이식해 얻은 신호를 신경망 컴퓨터를 이용해 단어와 문자로 재구성하는 데 성공하고 생물학 분야 출판 전 논문공개 사이트인 ‘바이오아카이브’(bioRxi)에 발표하기도 했다.

이번에는 미국 캘리포니아 샌프란시스코대(UCSF) 신경외과, 웨일신경과학연구소, 캘리포니아 버클리대(UC버클리)·UCSF 조인트생명공학프로그램 공동연구팀이 뇌·컴퓨터 인터페이스(BCI) 기술을 활용해 머릿속에서 생각하는 것을 언어로 변환시킬 수 있는 해독기술을 개발하고 세계적인 과학저널 ‘네이처’ 25일자에 발표했다.

연구팀은 뇌 부위에 칩을 심어 언어 관련 뇌파만 추출해 언어로 전환하는 기존 방식을 넘어 턱과 후두, 입술, 혀 등 발성기관들의 움직임과 관련된 뇌 신호까지 더해 음성이나 글로 전환시키는 방법을 찾아낸 것이다.

연구팀은 우선 신경외과 수술을 받아 뇌에 전극을 이식했지만 말하는 데 문제가 없는 20~40대 성인남녀 5명에게 ‘잠자는 숲속의 공주’, ‘개구리왕자’, ‘이상한나라의 앨리스’ 같은 책에 나오는 문장들 450~750개씩을 또박또박 읽도록 하면서 발성 기관과 언어 관련 부위 뇌파를 측정했다. 그다음 이들에게 문장을 말할 때 소리를 내지 않고 입만 뻥긋거리면서 읽도록 하거나 눈으로 읽도록 한 뒤 발생하는 뇌파도 측정했다.

이렇게 얻은 데이터를 신경망 기계학습 알고리즘으로 분석해 프로그래밍한 다음 실험 참가자들에게 단어나 짧은 문장을 생각하도록 해 컴퓨터나 인공음성 장치로 출력된 것과의 일치도를 살펴봤다.

그 결과 쉬운 단어나 문장의 경우는 69%를 정확하게 인식하고 기록하거나 표현한다는 사실을 확인했다. 복잡한 단어나 문장에 대한 표현 정확도는 47%로 떨어졌지만 언어의 리듬과 억양, 말하는 사람의 성별과 정체성까지 조절이 가능했다.

연구를 이끈 에드워드 창 UCSF대 신경외과 교수는 “BMI 기술을 이용해 팔과 다리의 운동능력을 상실한 사람을 대상으로 생각대로 사지를 움직일 수 있는 방법들이 많이 연구됐다”며 “실제 임상 적용을 위해서 추가 연구가 필요하겠지만 신경과학과 언어학, 기계학습의 전문지식을 활용한 BCI 기술을 통해 후천적으로 언어를 잃었거나 선천적으로 언어장애를 가진 사람들 모두 인공 성대를 사용해 자신의 생각을 자유롭게 말하고 표현할 수 있는 날이 곧 찾아올 것”이라고 설명했다.

(원문: 여기를 클릭하세요~)