Nature talks to Brent Hecht, who says peer reviewers must ensure that researchers consider negative societal consequences of their work.

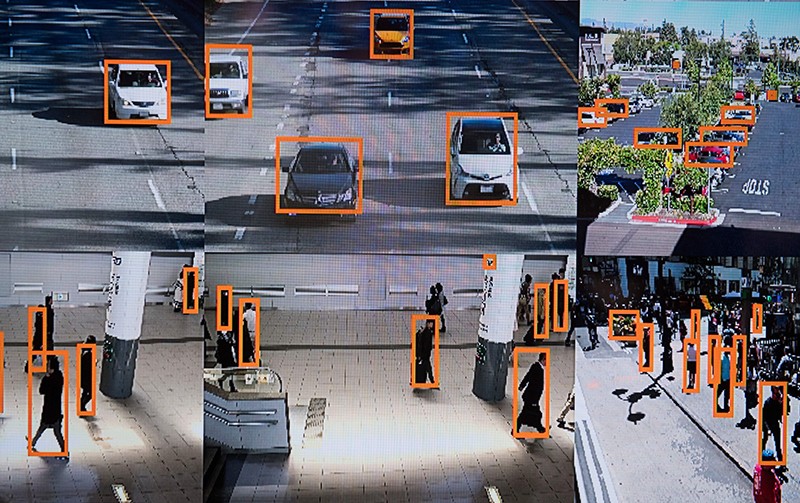

AI vehicle- and person-recognition software is at the centre of an ethical debate. Credit: Saul Loeb/AFP/Getty

In the midst of growing public concern over artificial intelligence (AI), privacy and the use of data, Brent Hecht has a controversial proposal: the computer-science community should change its peer-review process to ensure that researchers disclose any possible negative societal consequences of their work in papers, or risk rejection.

Hecht, a computer scientist, chairs the Future of Computing Academy (FCA), a group of young leaders in the field that pitched the policy in March. Without such measures, he says, computer scientists will blindly develop products without considering their impacts, and the field risks joining oil and tobacco as industries whose researchers history judges unfavourably.

The FCA is part of the Association for Computing Machinery (ACM) in New York City, the world’s largest scientific-computing society. It, too, is making changes to encourage researchers to consider societal impacts: on 17 July, it published an updated version of its ethics code, last redrafted in 1992. The guidelines call on researchers to be alert to how their work can influence society, take steps to protect privacy and continually reassess technologies whose impact will change over time, such as those based in machine learning.

Hecht, who works at Northwestern University in Evanston, Illinois, spoke to Nature about how his group’s proposal might help.

Brent Hecht. Credit: Thomas Mildner

What does the peer-review proposal for computer scientists entail?

It’s pretty simple. When a peer reviewer is handed a paper for a journal or conference, they’re asked to evaluate its intellectual rigour. And we say that this should include evaluating the intellectual rigour of the author’s claims of impact. The idea is not to try to predict the future, but, on the basis of the literature, to identify the expected side effects or unintended uses of this technology. It doesn’t sound that big, but because peer reviewers are the gatekeepers to all scholarly computer-science research, we’re talking about how gatekeepers open the gate.

And should publications reject a paper if the research has potentially negative impacts?

No, we’re not saying they should reject a paper with extensive negative impacts, just that all negative impacts should be disclosed. If authors don’t do it, reviewers should write to them and say that, as good scientists, they need to fully describe the possible outcomes before the paper can be published. For panels who decide on research funding, it’s tougher and they might want to have different rules, and consider whether to fund a research proposal if there’s a reasonable suspicion that it could hurt the country.

What drove the Future of Computing Academy to make the proposal?

In the past few years, there’s been a sea-change in how the public views the real-world impacts of computer science, which doesn’t align with how many in the computing community view our work. I’ve been concerned about this since university. In my first ever AI class, we learned about how a system had been developed to automate something that had previously been a person’s job. Everyone said, “Isn’t this amazing?”, but I was concerned about who did these jobs. It stuck with me that no one else’s ears perked up at the significant downside to this very cool invention. That scene has repeated itself over and over again throughout my career, whether that be how generative models, which create realistic audio and video from scratch, might threaten democracy, or the rapid decline in people’s privacy.

How did the field react to the proposal?

A sizeable population in computer science thinks that this is not our problem. But while that perspective was common ten years ago, I hear it less and less these days. The objections were more about the mechanism. One worry was that papers might be unfairly rejected because an author and a reviewer might disagree over what counts as a negative impact. But we’re moving towards a more iterative, dialogue-based process of review, and reviewers would need to cite rigorous reasons for their concerns, so I don’t think that should be much of a worry. If a few papers get rejected and resubmitted six months later and, as a result, our field has an arc of innovation towards positive impact, then I’m not too worried. Another critique was that it’s so hard to predict impacts that we shouldn’t even try. We all agree it’s hard and that we’re going to miss tonnes of them, but even if we catch just 1% or 5%, it’s worth it.

How can computer scientists go about predicting possible negative effects?

Computer science has been sloppy about how it understands and communicates the impacts of its work, because we haven’t been trained to think about these kinds of things. It’s like a medical study that says, “Look, we cured 1,000 people”, but doesn’t mention that it caused a new disease in 500 of them. But social scientists can really advance our understanding of how innovations impact the world, and we’re going to need to engage with them to execute our proposal. There are some more difficult cases to consider — for instance, in the theory papers that are far from practice. We need to be saying, based on existing evidence, what is the confidence that a given innovation will have a side effect? And if it’s above a certain threshold, we need to talk about it.

What happens now? Are peer reviewers going to start doing this?

We believe that in most cases, no changes are necessary for any peer reviewers to adopt our recommendations — it is already in their existing mandate to ensure intellectual rigour in all parts of the paper. It’s just dramatically underused. So researchers can begin to implement it immediately. But a team from the FCA is also working on more top-down ways of getting reviewers across the field to adopt the proposal, and we hope to have an announcement on this front shortly.

A lot of private technology companies do research that isn’t published in academic outlets. How will you reach them?

Then, the gatekeepers are the press, and it’s up to them to ask what the negative impacts of the technology are. A couple of months after we released our post, Google came out with its AI principles for research, and we were really heartened to see that those principles echo a tonne of what we put in the post.

If the peer-review policy only prompts authors to discuss negative consequences, how will it improve society?

Disclosing negative impacts is not just an end in itself, but a public statement of new problems that need to be solved. We need to bend the incentives in computer science towards making the net impact of innovations positive. When we retire will we tell our grandchildren, like those in the oil and gas industry: “We were just developing products and doing what we were told”? Or can we be the generation that finally took the reins on computing innovation and guided it towards positive impact?