Google’s controversial quantum computer chip finally unveiled: “We have achieved quantum supremacy”

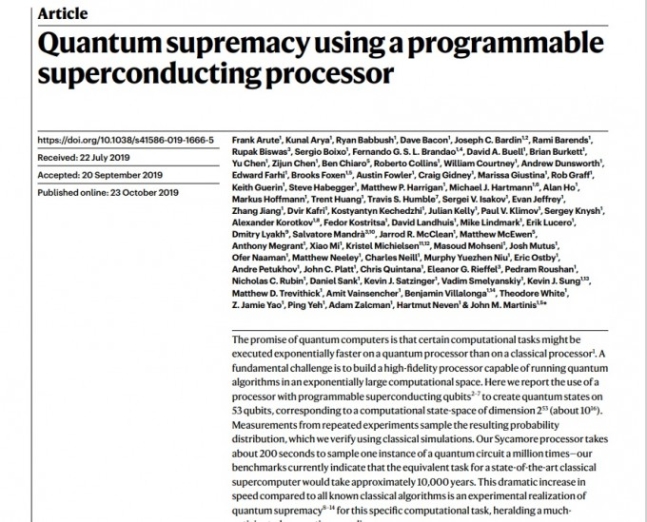

Google publishes paper in Nature, confirming that the chip outperforms a supercomputer on a specific random-number verification task

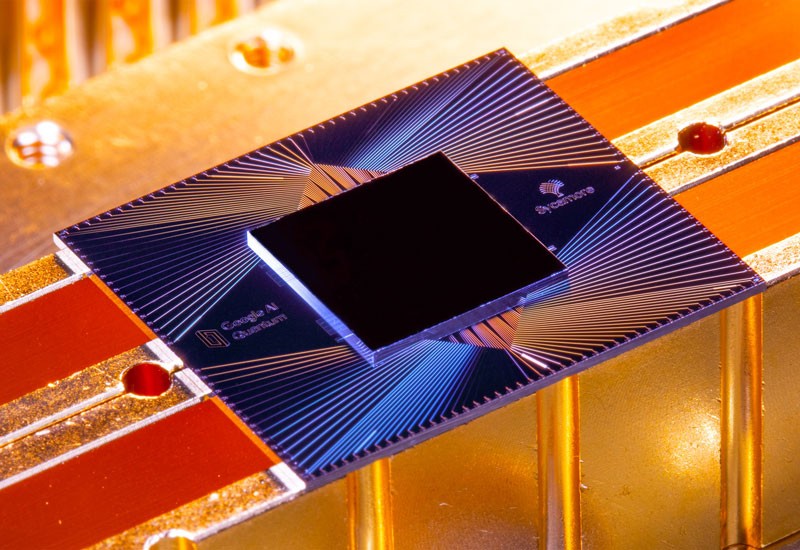

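Google has formally published a paper claiming ‘quantum supremacy’, in which a quantum computer outperforms conventional computers. The controversy that has run for more than a month since mid-September looks set to subside for now. Courtesy of Google AI Blog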

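In September, a document was posted on the website of the US National Aeronautics and Space Administration (NASA) and then taken down. It claimed that Sycamore, a quantum computer chip newly developed by Google, had completely overwhelmed the most powerful existing supercomputer on a specific task and had thereby achieved, for the first time, ‘quantum supremacy’ (also called quantum advantage), the point at which a quantum computer surpasses conventional digital computers in some respect. According to the document, Google had solved in 3 minutes 20 seconds (200 seconds) a problem that would take the best existing supercomputer 10,000 years, a claim that sent ripples through the science and engineering communities.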

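The result has now been published as a formal, peer-reviewed paper, and the leaked findings turned out to be entirely accurate. Since the leak was reported, and as recently as the 21st, rival IBM has maintained that “Google has not reached quantum supremacy”. Even so, it is now at least certain that Google has built the best quantum computer chip in existence and that it outperforms a supercomputer on a specific task.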

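John Martinis, a professor at UC Santa Barbara, and Google’s AI Quantum team published a paper in the 23 October online edition of the journal Nature reporting that they had “developed and successfully tested a new quantum computer chip, Sycamore (named after the sycamore tree), which solves in 3 minutes 20 seconds (200 seconds) a task that would take the most powerful existing supercomputer 10,000 years”. It is very rare for Nature to release a paper for immediate coverage outside its regular publication schedule, a sign of how intense the speculation and controversy around this paper had been.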

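Sycamore is a state-of-the-art quantum computer chip built from 53 qubits (quantum bits, the smallest unit of quantum information) connected in a cross (十) pattern. It uses the superconducting-circuit approach, which computes by exploiting the way pairs of electrons pass together, as if they were a single quantum object, through a Josephson junction: an electronic element in which a thin barrier layer is sandwiched between superconductors, materials whose resistance drops to zero when they are cooled to cryogenic temperatures near absolute zero. Along with the ion-trap approach being pursued by IonQ, the US quantum computing company in which Samsung decided to invest on the 23rd, the superconducting approach is regarded as one of the most promising ways to build qubits. An ion trap uses electromagnetic fields to isolate and control ions, then measures their quantum states to process quantum information.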

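Google used Sycamore to run a relatively simple algorithm that verifies random numbers, and evaluated its performance. Jeong Yeon-uk (정연욱), a principal researcher at the Quantum Technology Institute of the Korea Research Institute of Standards and Science (KRISS), said: “It is one of the algorithms devised to test the performance of quantum computer chips, and in most cases it is set up so that a quantum computer performs better than a conventional one.” Actually implementing it is difficult, however, and Google succeeded in completing the task on Sycamore in 3 minutes 20 seconds. Google said that “this task would take even the most powerful existing supercomputer 10,000 years”, in effect claiming quantum supremacy for this task alone. The most powerful existing supercomputer is Summit at Oak Ridge National Laboratory in the United States, which recorded 148 petaflops (PF) in the world supercomputer performance ranking announced at the supercomputing conference held in Frankfurt, Germany, in June 2019. One PF corresponds to one quadrillion (10^15) calculations per second.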

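Google said that “Sycamore is a programmable quantum computer chip” and that, “having already achieved quantum supremacy this spring, we are now testing applications in quantum chemistry, machine learning and quantum physics”.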

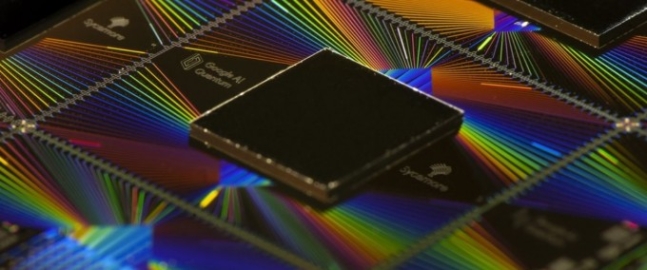

A schematic of the qubit layout of Sycamore, the quantum computer chip developed by Google. Courtesy of Google AI Blog

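A draft of the study leaked before formal publication, stirring up a major controversy. It began on the 20th of last month, when the British business daily Financial Times ran a story saying that Google had reached quantum supremacy. Citing a NASA document that has since disappeared, the story reported that Google had built a quantum computer chip code-named Sycamore and that it had outperformed a supercomputer. Google agreed last year to work with NASA on verifying quantum supremacy. Alongside the US company IBM, Google is one of the companies pursuing quantum computer development most aggressively in the world. Last year the two companies announced quantum computers built from 53 (IBM) and 72 (Google) units of quantum information (quantum bits, or qubits), respectively.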

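The controversy grew when rival IBM pushed back. IBM’s head of research commented directly to the Financial Times and other outlets, saying that “Google’s results cannot be trusted”. On the 21st, IBM posted an article on its own blog and released a paper on the preprint server arXiv, arguing that “Google has not reached quantum supremacy”.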

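In the blog post and paper, three experts including Edwin Pednault, a distinguished research staff member at IBM Research, and Jay Gambetta, a deputy director of IBM Research, wrote: “The Google document says that a problem that would take a conventional supercomputer 10,000 years was solved in 3 minutes 20 seconds on its newly developed quantum computer chip, but when we re-examined it, the problem turned out to be one that an existing supercomputer can solve in two and a half days with far greater fidelity.” Their argument is that Google has not shown performance that is utterly beyond the reach of a conventional computer. IBM added that “this is a very conservative estimate, and with further optimisation the resources needed for the calculation could be reduced even more”.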

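IBM said: “Google assumed that a conventional supercomputer would have to hold the entire Schrödinger state vector (a kind of variable that describes a state in quantum mechanics) in random-access memory (RAM) to carry out the task and that, because it cannot, it would have to rely on a different simulation that trades space for time (Schrödinger–Feynman simulation). On that basis Google estimated that the task would take 10,000 years. But Google overlooked the fact that conventional computers have external storage.”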
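The memory argument above can be made concrete with a little arithmetic. The following is a minimal sketch, not IBM’s or Google’s actual accounting: it assumes each complex amplitude is stored in 8 bytes (single-precision complex), which is an illustrative choice, and the function name `state_vector_bytes` is hypothetical.

```python
def state_vector_bytes(n_qubits: int, bytes_per_amplitude: int = 8) -> int:
    """Bytes needed to store all 2**n complex amplitudes of an n-qubit state.

    bytes_per_amplitude=8 assumes single-precision complex numbers
    (an assumption made for illustration only).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


PETABYTE = 10 ** 15  # decimal petabyte

for n in (30, 40, 53):
    size = state_vector_bytes(n)
    print(f"{n} qubits: {size:,} bytes = {size / PETABYTE:.6f} PB")
```

Under this assumption a 53-qubit state vector needs 2^56 bytes, on the order of tens of petabytes, which is why the question of RAM versus disk matters for the classical estimate.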

The first page of the Nature paper that Google published on the 23rd. Screenshot of the Nature paper

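They concluded that “a conventional computer can use both RAM and hard disks to manipulate and store the state vector, and can employ a variety of techniques to boost performance and ensure reliability”, and that “Google’s result is an excellent demonstration of the state of the art in 53-qubit devices using superconducting circuits, but it is a long way from quantum ‘supremacy’”. With Nature rushing the paper out, however, Sycamore’s performance has become an established fact.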

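Jeong said: “It has not been mathematically proven that a conventional computer can never outperform a quantum computer on the algorithm Google ran, so there is always a possibility that, with new algorithms and every available technique, a conventional computer could match or even beat a quantum computer. What IBM has done here is argue that Google has not reached quantum supremacy by maximising conventional performance in this way (raising the bar for quantum supremacy).” He added: “Quantum supremacy depends on how you define it, so whether Google has reached it may remain debatable. Even so, no one can deny that Google’s Sycamore is the best quantum computer chip in existence.”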

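Experts agree that this study is undoubtedly a major milestone for quantum computing. But the technology is still under development, so both unconditional optimism and premature fears that ‘every code can now be broken’ should be avoided. A general-purpose quantum computer has not yet been built: the performance has been confirmed only on one specific task, and many challenges remain, such as improving the reliability of the computation. Jeong said: “This result is significant as the field’s ‘moonshot’ (a nickname for the US Apollo programme, meaning a dramatic and bold leap). At the same time, it has made clear that completing a quantum computer will take another ten years or so.”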

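It is also worth remembering that quantum supremacy does not mean that quantum computers overwhelm conventional ones. In the article IBM Research posted on the 21st, Edwin Pednault and colleagues wrote that “quantum computers and conventional computers each have their own unique strengths, so rather than one reigning over the other, they will work together”.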

■ Glossary: quantum computer

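A quantum computer exploits ‘superposition’, the quantum-mechanical property that the information carried by a quantum system cannot be pinned down until it is observed. Quantum systems are prepared from particles such as ions or electrons, and several of them are linked so that they are correlated with one another (a state called ‘entanglement’). These controlled quantum systems are then used as units of information, called qubits. A digital bit holds one definite value, either 0 or 1, whereas quantum information is both 0 and 1 until it is observed, so many qubits together can process a vast amount of information in parallel. Take two qubits as an example: two conventional bits can hold only one of the four values 00, 01, 10 and 11 at a time, whereas two qubits can hold a superposition of all four at once.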
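The two-qubit example can be written out as a tiny state-vector calculation. This is a minimal sketch under simple assumptions: each qubit is put into an equal superposition of 0 and 1 (what a Hadamard gate does to the 0 state), and the joint state is their tensor product. The variable names are illustrative.

```python
import math

# One qubit in an equal superposition: amplitudes for |0> and |1>.
amp = 1 / math.sqrt(2)
plus = [amp, amp]

# Tensor (Kronecker) product of two such qubits gives four amplitudes,
# ordered as the basis states 00, 01, 10, 11.
state = [a * b for a in plus for b in plus]

# Born rule: the probability of each outcome is the squared magnitude.
probs = [abs(x) ** 2 for x in state]

for label, p in zip(["00", "01", "10", "11"], probs):
    print(label, round(p, 3))
```

All four values carry equal weight in the state, but a single measurement still returns only one of them, which is why useful quantum algorithms rely on interference rather than on reading out every value.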

A programmable quantum computer has been reported to outperform the most powerful conventional computers in a specific task — a milestone in computing comparable in importance to the Wright brothers’ first flights.

Quantum computers promise to perform certain tasks much faster than ordinary (classical) computers. In essence, a quantum computer carefully orchestrates quantum effects (superposition, entanglement and interference) to explore a huge computational space and ultimately converge on a solution, or solutions, to a problem. If the numbers of quantum bits (qubits) and operations reach even modest levels, carrying out the same task on a state-of-the-art supercomputer becomes intractable on any reasonable timescale, a regime termed quantum computational supremacy [1]. However, reaching this regime requires a robust quantum processor, because each additional imperfect operation incessantly chips away at overall performance. It has therefore been questioned whether a sufficiently large quantum computer could ever be controlled in practice. But now, in a paper in Nature, Arute et al. [2] report quantum supremacy using a 53-qubit processor.

Arute and colleagues chose a task that is related to random-number generation: namely, sampling the output of a pseudo-random quantum circuit. This task is implemented by a sequence of operational cycles, each of which applies operations called gates to every qubit in an n-qubit processor. These operations include randomly selected single-qubit gates and prescribed two-qubit gates. The output is then determined by measuring each qubit.

The resulting strings of 0s and 1s are not uniformly distributed over all 2^n possibilities. Instead, they have a preferential, circuit-dependent structure, with certain strings being much more likely than others because of quantum entanglement and quantum interference. Repeating the experiment and sampling a sufficiently large number of these solutions results in a distribution of likely outcomes. Simulating this probability distribution on a classical computer using even today’s leading algorithms becomes exponentially more challenging as the number of qubits and operational cycles is increased.
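To make the sampling task concrete, here is a toy state-vector simulation of a random circuit on a handful of qubits. It is a sketch under stated assumptions, not the Sycamore circuit: the single-qubit gate set (√X, √Y, T), the controlled-Z two-qubit gate and the one-dimensional nearest-neighbour layout are illustrative choices, and all function names are hypothetical.

```python
import cmath
import math
import random
from collections import Counter

# Illustrative single-qubit gate set (an assumption, not the paper's exact set).
SQRT_X = [[0.5 + 0.5j, 0.5 - 0.5j], [0.5 - 0.5j, 0.5 + 0.5j]]
SQRT_Y = [[0.5 + 0.5j, -0.5 - 0.5j], [0.5 + 0.5j, 0.5 + 0.5j]]
T_GATE = [[1, 0], [0, cmath.exp(1j * math.pi / 4)]]
SINGLE_QUBIT_GATES = [SQRT_X, SQRT_Y, T_GATE]


def apply_1q(state, gate, q):
    """Apply a 2x2 gate to qubit q (bit q of the basis-state index)."""
    out = list(state)
    mask = 1 << q
    for i in range(len(state)):
        if not i & mask:
            a, b = state[i], state[i | mask]
            out[i] = gate[0][0] * a + gate[0][1] * b
            out[i | mask] = gate[1][0] * a + gate[1][1] * b
    return out


def apply_cz(state, q1, q2):
    """Controlled-Z: flip the sign of amplitudes where both qubits are 1."""
    mask = (1 << q1) | (1 << q2)
    return [-a if (i & mask) == mask else a for i, a in enumerate(state)]


def random_circuit_probs(n, cycles, rng):
    """Return the ideal output probabilities of a random n-qubit circuit."""
    state = [0j] * (1 << n)
    state[0] = 1 + 0j  # start in |00...0>
    for c in range(cycles):
        for q in range(n):  # a random single-qubit gate on every qubit
            state = apply_1q(state, rng.choice(SINGLE_QUBIT_GATES), q)
        for q in range(c % 2, n - 1, 2):  # alternate neighbouring pairs
            state = apply_cz(state, q, q + 1)
    return [abs(a) ** 2 for a in state]


rng = random.Random(0)
n = 4
probs = random_circuit_probs(n, cycles=8, rng=rng)

# "Measure" the circuit many times by sampling bitstrings.
samples = rng.choices(range(1 << n), weights=probs, k=10_000)
for x, count in Counter(samples).most_common(5):
    print(format(x, f"0{n}b"), count)  # qubit 0 is the rightmost bit
```

The list `state` holds 2^n amplitudes, so each extra qubit doubles the memory and work, which is the exponential cost the text refers to.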

In their experiment, Arute et al. used a quantum processor dubbed Sycamore. This processor comprises 53 individually controllable qubits, 86 couplers (links between qubits) that are used to turn nearest-neighbour two-qubit interactions on or off, and a scheme to measure all of the qubits simultaneously. In addition, the authors used 277 digital-to-analog converter devices to control the processor.

When all the qubits were operated simultaneously, each single-qubit and two-qubit gate had approximately 99–99.9% fidelity, a measure of how similar an actual outcome of an operation is to the ideal outcome. The attainment of such fidelities is one of the remarkable technical achievements that enabled this work. Arute and colleagues determined the fidelities using a protocol known as cross-entropy benchmarking (XEB). This protocol was introduced last year [3] and offers certain advantages over other methods for diagnosing systematic and random errors.
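A common way to state the XEB estimate is the linear form F = 2^n ⟨P(x_i)⟩ − 1, where P(x_i) is the ideal probability of each measured bitstring. The formula is not given in the text above, so treat it as an assumption of this sketch. The stand-in "ideal" distribution below is generated from random Gaussian amplitudes rather than from a real circuit, and the function name `linear_xeb` is hypothetical.

```python
import random


def linear_xeb(samples, ideal_probs, n_qubits):
    """Linear cross-entropy estimate: 2**n * mean(P_ideal(x_i)) - 1."""
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return (2 ** n_qubits) * mean_p - 1


rng = random.Random(1)
n = 8
dim = 2 ** n

# Stand-in for an ideal circuit output: squared magnitudes of random
# complex Gaussian amplitudes (an assumption made for illustration).
weights = [rng.gauss(0, 1) ** 2 + rng.gauss(0, 1) ** 2 for _ in range(dim)]
total = sum(weights)
ideal_probs = [w / total for w in weights]

# A perfect device samples from the ideal distribution;
# a fully noisy device returns uniformly random bitstrings.
ideal_samples = rng.choices(range(dim), weights=ideal_probs, k=50_000)
uniform_samples = [rng.randrange(dim) for _ in range(50_000)]

print("perfect device:", round(linear_xeb(ideal_samples, ideal_probs, n), 3))
print("pure noise:    ", round(linear_xeb(uniform_samples, ideal_probs, n), 3))
```

Pure noise scores close to zero, while samples drawn from the ideal distribution score close to one for a sufficiently scrambled circuit, so intermediate values can be read as a fidelity.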

The authors’ demonstration of quantum supremacy involved sampling the solutions from a pseudo-random circuit implemented on Sycamore and then comparing these results to simulations performed on several powerful classical computers, including the Summit supercomputer at Oak Ridge National Laboratory in Tennessee (see go.nature.com/35zfbuu). Summit is currently the world’s leading supercomputer, capable of carrying out about 200 million billion operations per second. It comprises roughly 40,000 processor units, each of which contains billions of transistors (electronic switches), and has 250 million gigabytes of storage. Approximately 99% of Summit’s resources were used to perform the classical sampling.

Verifying quantum supremacy for the sampling problem is challenging, because this is precisely the regime in which classical simulations are infeasible. To address this issue, Arute et al. first carried out experiments in a classically verifiable regime using three different circuits: the full circuit, the patch circuit and the elided circuit (Fig. 1). The full circuit used all n qubits and was the hardest to simulate. The patch circuit cut the full circuit into two patches that each had about n/2 qubits and were individually much easier to simulate. Finally, the elided circuit made limited two-qubit connections between the two patches, resulting in a level of computational difficulty that is intermediate between those of the full circuit and the patch circuit.
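A rough way to see why the patch circuit is so much easier to simulate is to count state-vector amplitudes. This sketch assumes a plain state-vector simulation and a 26/27 split of the 53 qubits; both are illustrative assumptions, and real simulation cost depends on much more than amplitude counts.

```python
def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes in an n-qubit state vector."""
    return 2 ** n_qubits


n = 53
patch_a = n // 2       # 26 qubits (assumed split)
patch_b = n - patch_a  # 27 qubits

full = amplitudes(n)
patches = amplitudes(patch_a) + amplitudes(patch_b)

print(f"full circuit : {full:,} amplitudes")
print(f"two patches  : {patches:,} amplitudes")
print(f"ratio        : {full / patches:,.0f}x")
```

Because the two patches are independent, their state vectors can be stored separately, so the total grows like 2 × 2^(n/2) instead of 2^n.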

Figure 1 | Three types of quantum circuit. Arute et al. [2] demonstrate that a quantum processor containing 53 quantum bits (qubits) and 86 couplers (links between qubits) can complete a specific task much faster than an ordinary computer can simulate the same task. Their demonstration is based on three quantum circuits: the full circuit, the patch circuit and the elided circuit. The full circuit comprises all 53 qubits and is the hardest to simulate on an ordinary computer. The patch circuit cuts the full circuit into two patches that are each relatively easy to simulate. Finally, the elided circuit links these two patches using a reduced number of two-qubit operations along reintroduced two-qubit connections and is intermediate between the full and patch circuits, in terms of its ease of simulation.

The authors selected a simplified set of two-qubit gates and a limited number of cycles (14) to produce full, patch and elided circuits that could be simulated in a reasonable amount of time. Crucially, the classical simulations for all three circuits yielded consistent XEB fidelities for up to n = 53 qubits, providing evidence that the patch and elided circuits serve as good proxies for the full circuit. The simulations of the full circuit also matched calculations that were based solely on the individual fidelities of the single-qubit and two-qubit gates. This finding indicates that errors remain well described by a simple, localized model, even as the number of qubits and operations increases.
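The "simple, localized" error model mentioned above can be sketched as a product of independent per-operation success probabilities. Every number below is a hypothetical placeholder chosen for illustration: the error rates are picked from inside the 99–99.9% fidelity range quoted earlier, the measurement error and the number of two-qubit gates per cycle are invented, and the function names are not from the paper.

```python
def predicted_fidelity(n_single, n_two, n_measured, e1, e2, e_meas):
    """Multiply the success probabilities of every operation in the circuit."""
    return ((1 - e1) ** n_single) * ((1 - e2) ** n_two) * ((1 - e_meas) ** n_measured)


N_QUBITS = 53
E1, E2, E_MEAS = 0.001, 0.01, 0.03  # hypothetical error rates
TWO_QUBIT_GATES_PER_CYCLE = 20      # hypothetical count


def circuit_fidelity(cycles):
    """Fidelity estimate for a circuit with one single-qubit gate per qubit per cycle."""
    return predicted_fidelity(
        n_single=N_QUBITS * cycles,
        n_two=TWO_QUBIT_GATES_PER_CYCLE * cycles,
        n_measured=N_QUBITS,
        e1=E1,
        e2=E2,
        e_meas=E_MEAS,
    )


for cycles in (14, 20):
    print(f"{cycles} cycles: predicted fidelity {circuit_fidelity(cycles):.4%}")
```

The point of the sketch is the shape of the model: fidelity decays exponentially with the number of operations, so even gates that are 99% or better leave only a small fraction of a percent after hundreds of them.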

Arute and colleagues’ longest, directly verifiable measurement was performed on the full circuit (containing 53 qubits) over 14 cycles. The quantum processor took one million samples in 200 seconds to reach an XEB fidelity of 0.8% (with a sensitivity limit of roughly 0.1% owing to the sampling statistics). By comparison, performing the sampling task at 0.8% fidelity on a classical computer (containing about one million processor cores) took 130 seconds, and a precise classical verification (100% fidelity) took 5 hours. Given the immense disparity in physical resources, these results already show a clear advantage of quantum hardware over its classical counterpart.
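The quoted sensitivity limit of roughly 0.1% is consistent with ordinary sampling statistics. As a minimal sketch, assume the per-sample XEB estimator has a variance close to one (an assumption, roughly what one expects at low fidelity); the standard error of the mean over N samples is then about 1/√N.

```python
import math


def xeb_standard_error(n_samples: int) -> float:
    """Approximate standard error of an XEB estimate, assuming unit variance per sample."""
    return 1 / math.sqrt(n_samples)


for n_samples in (10_000, 1_000_000, 100_000_000):
    print(f"{n_samples:>11,} samples -> about {xeb_standard_error(n_samples):.2%}")
```

With one million samples this gives about 0.1%, so a measured fidelity of 0.8% sits several standard errors above zero.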

The authors then extended the circuits into the not-directly-verifiable supremacy regime. They used a broader set of two-qubit gates to spread entanglement more widely across the full 53-qubit processor and increased the number of cycles from 14 to 20. The full circuit could not be simulated or directly verified in a reasonable amount of time, so Arute et al. simply archived these quantum data for future reference — in case extremely efficient classical algorithms are one day discovered that would enable verification. However, the patch-circuit, elided-circuit and calculated XEB fidelities all remained in agreement. When 53 qubits were operating over 20 cycles, the XEB fidelity calculated using these proxies remained greater than 0.1%. Sycamore sampled the solutions in a mere 200 seconds, whereas classical sampling at 0.1% fidelity would take 10,000 years, and full verification would take several million years.

This demonstration of quantum supremacy over today’s leading classical algorithms on the world’s fastest supercomputers is truly a remarkable achievement and a milestone for quantum computing. It experimentally suggests that quantum computers represent a model of computing that is fundamentally different from that of classical computers [4]. It also further combats criticisms [5,6] about the controllability and viability of quantum computation in an extraordinarily large computational space (containing at least the 2^53 states used here).

However, much work is needed before quantum computers become a practical reality. In particular, algorithms will have to be developed that can be commercialized and operate on the noisy (error-prone) intermediate-scale quantum processors that will be available in the near term [1]. And researchers will need to demonstrate robust protocols for quantum error correction that will enable sustained, fault-tolerant operation in the longer term.

Arute and colleagues’ demonstration is in many ways reminiscent of the Wright brothers’ first flights. Their aeroplane, the Wright Flyer, wasn’t the first airborne vehicle to fly, and it didn’t solve any pressing transport problem. Nor did it herald the widespread adoption of planes or mark the beginning of the end for other modes of transport. Instead, the event is remembered for having shown a new operational regime — the self-propelled flight of an aircraft that was heavier than air. It is what the event represented, rather than what it practically accomplished, that was paramount. And so it is with this first report of quantum computational supremacy.

Nature 574, 487-488 (2019)

A precarious milestone for quantum computing

Quantum computing will suffer if supremacy is overhyped. Everyday quantum computers are still decades away.

Members of Google’s AI quantum team, from left: Charles Neill, Pedram Roushan, Anthony Megrant and team leader John Martinis. Credit: Matt Perko/UCSB

Researchers led by Google’s AI Quantum team have demonstrated ‘quantum supremacy’ by creating a chip that performed a computational task faster than a classical computer. As we report in this issue, an achievement that the researchers say would have taken the world’s fastest supercomputer 10,000 years was completed in just over 3 minutes (F. Arute et al. Nature 574, 505–510; 2019).

As the world digests this achievement, including the claim that some quantum computational tasks are beyond supercomputers, it is too early to say whether supremacy represents a new dawn for information technology. It could be that we are looking at quantum computing’s Kitty Hawk moment, a reference to the many decades between the Wright brothers’ first flight at Kitty Hawk, North Carolina, in 1903 and the advent of the jet age. At the very least, quantum computers as a routine part of life are likely to be decades or more into the future.

Still, this achievement in science and engineering should certainly not be underestimated. Research teams around the world have been working intensely to unleash the processing power of quantum phenomena: these include superposition, in which particles seem to have multiple states until they are observed; and entanglement, which describes how the properties of quantum systems can be tied together. If these behaviours can be more precisely controlled, they would generate exponential gains in processing power for certain tasks compared with today’s supercomputers. And that is what the team at Google has achieved.

Its chip, known as Sycamore, comprises just 53 individually controllable superconducting quantum bits (qubits), the basic building blocks of quantum computers. The team chose to calculate the outputs of a random quantum circuit — rather like a quantum random number generator. This is not an easy problem, and the Summit supercomputer at Oak Ridge National Laboratory in Tennessee, the world’s most powerful machine in its class, would have taken 10 millennia to complete it, the researchers say. Sycamore needed only 200 seconds.

Summit can call on more than 9,000 of the most powerful central processing units (8 billion transistors in each) and nearly 28,000 graphics processors (21 billion transistors each). With such raw computing power outgunned by just 53 qubits, it’s understandable that quantum computers are generating such excitement and optimism.

But this demonstration of quantum supremacy is extremely limited. There’s a vast gap to be bridged before quantum computers can do more meaningful things — such as simulating the properties of materials or chemical reactions, or accelerating drug discovery.

For one thing, quantum computers are highly sensitive to environmental noise — including everyday phenomena such as temperature changes and electromagnetic fields. And researchers are a long way from being able to design out these and other obstacles.

Instead of proceeding with caution, a quantum gold rush is under way, with investors joining governments and companies to pour large sums of money into developing quantum technologies. Unrealistic expectations are being fuelled that powerful general-purpose quantum computers could soon be on the horizon. Such misguided optimism could be dangerous for the future of this still-fledgling field.

Such a landscape has created a flourishing network of quantum technologists, but those providing the funding will eventually seek a return on investment. There are already concerns that some firms are over-promising, which is why over-hyping this landmark demonstration could raise expectations further. Researchers fear that, if quantum computers fail to deliver anything useful soon, a ‘quantum winter’ could descend in which research progress slows, investment stalls and disillusion sets in.

The powerful processors that underpin today’s devices such as smartphones were developed from decades of sustained investment — often public investment — in research. Quantum processors will similarly require what innovation economists call ‘patient capital’.

Too often in the history of science and technology, expectations are raised, only for reality to get in the way. Quantum computers are still near the start of a long and unpredictable journey. As they encounter challenges and costs start to mount, researchers must know that they can reach their destination.

Nature 574, 453-454 (2019)

Hello quantum world! Google publishes landmark quantum supremacy claim

The company says that its quantum computer is the first to perform a calculation that would be practically impossible for a classical machine.

The Sycamore chip is composed of 54 qubits, each made of superconducting loops. Credit: Erik Lucero

Scientists at Google say that they have achieved quantum supremacy, a long-awaited milestone in quantum computing. The announcement, published in Nature on 23 October, follows a leak of an early version of the paper five weeks ago, which Google did not comment on at the time.

In a world first, a team led by John Martinis, an experimental physicist at the University of California, Santa Barbara, and Google in Mountain View, California, says that its quantum computer carried out a specific calculation that is beyond the practical capabilities of regular, ‘classical’ machines [1]. The same calculation would take even the best classical supercomputer 10,000 years to complete, Google estimates.

Quantum supremacy has long been seen as a milestone because it proves that quantum computers can outperform classical computers, says Martinis. Although the advantage has now been proved only for a very specific case, it shows physicists that quantum mechanics works as expected when harnessed in a complex problem.

“It looks like Google has given us the first experimental evidence that quantum speed-up is achievable in a real-world system,” says Michelle Simmons, a quantum physicist at the University of New South Wales in Sydney, Australia.

Martinis likens the experiment to a ‘Hello World’ programme, which tests a new system by instructing it to display that phrase; it’s not especially useful in itself, but it tells Google that the quantum hardware and software are working correctly, he says.

The feat was first reported in September by the Financial Times and other outlets, after an early version of the paper was leaked on the website of NASA, which collaborates with Google on quantum computing, before being quickly taken down. At that time, the company did not confirm that it had written the paper, nor would it comment on the stories.

Although the calculation Google chose — checking the outputs from a quantum random-number generator — has limited practical applications, “the scientific achievement is huge, assuming it stands, and I’m guessing it will”, says Scott Aaronson, a theoretical computer scientist at the University of Texas at Austin.

Researchers outside Google are already trying to improve on the classical algorithms used to tackle the problem, in hopes of bringing down the firm’s 10,000-year estimate. IBM, a rival to Google in building the world’s best quantum computers, reported in a preprint on 21 October that the problem could be solved in just 2.5 days using a different classical technique [2]. That paper has not been peer reviewed. If IBM is correct, it would reduce Google’s feat to demonstrating a quantum ‘advantage’: doing a calculation much faster than a classical computer, but not something that is beyond its reach. This would still be a significant landmark, says Simmons. “As far as I’m aware, that’s the first time that’s been demonstrated, so that’s definitely a big result.”
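The gap between the two classical estimates is easier to judge as a speed-up factor relative to Sycamore’s 200 seconds. This is plain arithmetic on the figures quoted in the text; the only assumption is a 365.25-day year.

```python
SECONDS_PER_DAY = 24 * 60 * 60
SECONDS_PER_YEAR = 365.25 * SECONDS_PER_DAY  # assumed year length

sycamore_seconds = 200
google_estimate_seconds = 10_000 * SECONDS_PER_YEAR  # Google's classical estimate
ibm_estimate_seconds = 2.5 * SECONDS_PER_DAY         # IBM's classical estimate

google_speedup = google_estimate_seconds / sycamore_seconds
ibm_speedup = ibm_estimate_seconds / sycamore_seconds

print(f"speed-up under Google's estimate: {google_speedup:.3g}x")
print(f"speed-up under IBM's estimate:    {ibm_speedup:.3g}x")
```

Under Google’s estimate the quantum chip is more than a billion times faster; under IBM’s it is about a thousand times faster, which is still a large advantage but no longer out of classical reach.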

Quick solutions

Quantum computers work in a fundamentally different way from classical machines: a classical bit is either a 1 or a 0, but a quantum bit, or qubit, can exist in multiple states at once. When qubits are inextricably linked, physicists can, in theory, exploit the interference between their wave-like quantum states to perform calculations that might otherwise take millions of years.

Physicists think that quantum computers might one day run revolutionary algorithms that could, for example, search unwieldy databases or factor large numbers — including, importantly, those used in encryption. But those applications are still decades away. The more qubits are linked, the harder it is to maintain their fragile states while the device is operating. Google’s algorithm runs on a quantum chip composed of 54 qubits, each made of superconducting loops. But this is a tiny fraction of the one million qubits that could be needed for a general-purpose machine.

The task Google set for its quantum computer is “a bit of a weird one”, says Christopher Monroe, a physicist at the University of Maryland in College Park. Google physicists first crafted the problem in 2016, and it was designed to be extremely difficult for an ordinary computer to solve. The team challenged its computer, known as Sycamore, to describe the likelihood of different outcomes from a quantum version of a random-number generator. They do this by running a circuit that passes 53 qubits through a series of random operations. This generates a 53-digit string of 1s and 0s, with a total of 2^53 possible combinations (only 53 qubits were used because one of Sycamore’s 54 was broken). The process is so complex that the outcome is impossible to calculate from first principles, and is therefore effectively random. But owing to interference between qubits, some strings of numbers are more likely to occur than others. This is similar to rolling a loaded die: it still produces a random number, even though some outcomes are more likely than others.

Sycamore calculated the probability distribution by sampling the circuit — running it one million times and measuring the observed output strings. The method is similar to rolling the die to reveal its bias. In one sense, says Monroe, the machine is doing something scientists do every day: using an experiment to find the answer to a quantum problem that is impossible to calculate classically. The key difference, he says, is that Google’s computer is not single-purpose, but programmable, and could be applied to a quantum circuit with any settings.
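The loaded-die analogy translates directly into a few lines of code: roll the die many times and read its bias off the observed frequencies. The bias values below are hypothetical and chosen only for illustration.

```python
import random
from collections import Counter

rng = random.Random(42)

# Hypothetical bias for faces 1..6 (sums to 1); face 6 is favoured.
true_bias = [0.05, 0.10, 0.15, 0.20, 0.20, 0.30]

# "Run the experiment" one million times, as in the sampling described above.
rolls = rng.choices(range(1, 7), weights=true_bias, k=1_000_000)
counts = Counter(rolls)

# The observed frequencies estimate the hidden probabilities.
estimated = [counts[face] / len(rolls) for face in range(1, 7)]

for face, (est, true) in enumerate(zip(estimated, true_bias), start=1):
    print(f"face {face}: estimated {est:.3f}, true {true:.2f}")
```

A six-sided die has only six outcomes, so a million rolls pin down every probability; with 2^53 possible bitstrings a million samples cannot, which is why the experiment relies on a summary statistic such as a fidelity estimate instead.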

Google’s quantum computer excels at checking the outputs of a quantum random number generator. Credit: Erik Lucero

Verifying the solution was a further challenge. To do that, the team compared the results with those from simulations of smaller and simpler versions of the circuits, which were done by classical computers — including the Summit supercomputer at Oak Ridge National Laboratory in Tennessee. Extrapolating from these examples, the Google team estimates that simulating the full circuit would take 10,000 years even on a computer with one million processing units (equivalent to around 100,000 desktop computers). Sycamore took just 3 minutes and 20 seconds.

Google thinks their evidence for quantum supremacy is airtight. Even if external researchers cut the time it takes to do the classical simulation, quantum hardware is improving — meaning that for this problem, conventional computers are unlikely to ever catch up, says Hartmut Neven, who runs Google’s quantum-computing team.

Limited applications

Monroe says that Google’s achievement might benefit quantum computing by attracting more computer scientists and engineers to the field. But he also warns that the news could create the impression that quantum computers are closer to mainstream practical applications than they really are. “The story on the street is ‘they’ve finally beaten a regular computer: so here we go, two years and we’ll have one in our house’,” he says.

In reality, Monroe adds, scientists are yet to show that a programmable quantum computer can solve a useful task that cannot be done any other way, such as by calculating the electronic structure of a particular molecule — a fiendish problem that requires modelling multiple quantum interactions. Another important step, says Aaronson, is demonstrating quantum supremacy in an algorithm that uses a process known as error correction — a method to correct for noise-induced errors that would otherwise ruin a calculation. Physicists think this will be essential to getting quantum computers to function at scale.

Google is working towards both of these milestones, says Martinis, and will reveal the results of its experiments in the coming months.

Aaronson says that the experiment Google devised to demonstrate quantum supremacy might have practical applications: he has created a protocol to use such a calculation to prove to a user that the bits generated by a quantum random-number generator really are random. This could be useful, for example, in cryptography and some cryptocurrencies, whose security relies on random keys.

Google engineers had to carry out a raft of improvements to their hardware to run the algorithm, including building new electronics to control the quantum circuit and devising a new way to connect qubits, says Martinis. “This is really the basis of how we’re going to scale up in the future. We think this basic architecture is the way forward,” he says.

Nature 574, 461-462 (2019)
